Are the refractory bricks in your dry quenching unit cracking every time they face rapid temperature changes? Thermal shock damage is a persistent problem in harsh industrial environments where temperature swings can exceed 800°C. To evaluate how stable your refractory materials really are, you need to understand the full laboratory testing workflow.

Dry quenching systems see extreme, sudden temperature changes during operation, often cycling between peak temperatures near 1500°C and rapid cooling phases. This repeated thermal stress causes refractory bricks to crack or fail prematurely, leading to costly maintenance and unexpected shutdowns.

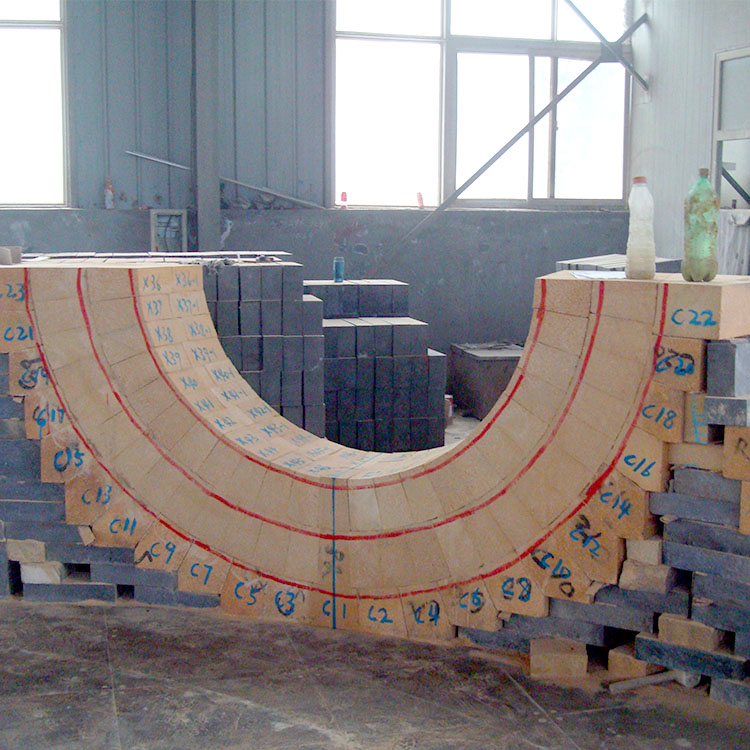

The cornerstone of thermal shock assessment is the water quench test, run at a temperature difference of ΔT = 850°C. Here’s how it works:

This method replicates operational stresses and quantifies resistance to thermal shock cracking by measuring crack length, crack count, and weight loss after testing.
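To make the three measurements concrete, here is a minimal Python sketch that turns one specimen's post-test readings into summary indicators. The record layout (`QuenchResult`), the function name, and the sample numbers are all assumptions made for illustration; they are not taken from any test standard, and pass/fail limits are deliberately left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QuenchResult:
    """Readings for one specimen after water quench cycling (hypothetical layout)."""
    mass_before_g: float
    mass_after_g: float
    crack_lengths_mm: List[float]


def summarize(result: QuenchResult) -> dict:
    """Return crack count, crack length totals, and weight loss as a percentage."""
    if result.mass_before_g <= 0:
        raise ValueError("mass_before_g must be positive")
    loss_pct = 100.0 * (result.mass_before_g - result.mass_after_g) / result.mass_before_g
    return {
        "crack_count": len(result.crack_lengths_mm),
        "total_crack_length_mm": sum(result.crack_lengths_mm),
        "max_crack_length_mm": max(result.crack_lengths_mm, default=0.0),
        "weight_loss_pct": loss_pct,
    }


# Invented example values, not measured data.
specimen = QuenchResult(mass_before_g=500.0, mass_after_g=490.0,
                        crack_lengths_mm=[12.0, 7.5, 3.0])
summary = summarize(specimen)
print(summary)
```

Keeping the indicators in one record per specimen makes it easier to compare batches or brick grades side by side.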

Lab results are foundational, but they need on-site validation. Engineers track crack growth rates and spalled areas in service using periodic inspections and non-destructive techniques:

Understanding these parameters is essential to predict when to perform repairs or replacements, optimizing operational uptime and safety.
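As a rough illustration of how inspection records can feed a repair schedule, the sketch below fits a straight line to crack length over time and projects when a crack would reach a chosen limit. It assumes linear growth, which real cracks may not follow, and the function names, the 25 mm limit, and the inspection values are hypothetical.

```python
from typing import List, Optional, Tuple

Inspection = Tuple[float, float]  # (days since installation, crack length in mm)


def crack_growth_rate(inspections: List[Inspection]) -> float:
    """Least-squares slope of crack length against time, in mm per day."""
    n = len(inspections)
    if n < 2:
        raise ValueError("need at least two inspections")
    mean_t = sum(t for t, _ in inspections) / n
    mean_l = sum(length for _, length in inspections) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in inspections)
    if sxx == 0:
        raise ValueError("inspection times must differ")
    sxy = sum((t - mean_t) * (length - mean_l) for t, length in inspections)
    return sxy / sxx


def days_until_limit(inspections: List[Inspection], limit_mm: float) -> Optional[float]:
    """Days from the latest inspection until the crack reaches limit_mm.

    Returns 0.0 if the limit is already reached and None if the crack is not growing.
    """
    rate = crack_growth_rate(inspections)
    _, latest_length = max(inspections, key=lambda point: point[0])
    if latest_length >= limit_mm:
        return 0.0
    if rate <= 0:
        return None
    return (limit_mm - latest_length) / rate


# Invented quarterly inspection data for one crack.
history = [(0.0, 10.0), (90.0, 13.0), (180.0, 16.0), (270.0, 19.0)]
rate = crack_growth_rate(history)
remaining = days_until_limit(history, limit_mm=25.0)
print(f"growth rate: {rate:.4f} mm/day, days to limit: {remaining:.0f}")
```

The projection is only as good as the linear assumption, so it is better treated as a prompt for the next inspection than as a guaranteed service life.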

Many manufacturers and engineers mistakenly rely on load softening temperature (LST) alone as an indicator of refractory performance. LST indicates the temperature at which a brick deforms under load; it does not directly measure resistance to rapid thermal cycling. Prioritizing thermal shock indicators prevents:

Field engineers increasingly use infrared (IR) thermal cameras to detect hotspots indicative of localized overheating, which is a precursor to thermal shock damage. Here are effective tips for your teams:
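As one illustration of hotspot screening, the sketch below flags cells in an IR frame that run well above the frame's median temperature. It assumes the camera data has already been exported as a grid of temperatures in °C; the function name, the 30°C margin, and the sample frame are invented for this example and should be tuned to your own unit.

```python
from statistics import median
from typing import List, Tuple


def find_hotspots(grid: List[List[float]], margin_c: float) -> List[Tuple[int, int, float]]:
    """Return (row, col, temperature) for cells hotter than the frame median plus margin_c."""
    values = [t for row in grid for t in row]
    if not values:
        return []
    baseline = median(values)
    return [
        (r, c, t)
        for r, row in enumerate(grid)
        for c, t in enumerate(row)
        if t - baseline > margin_c
    ]


# Invented 3x3 frame of surface temperatures in degrees Celsius.
frame = [
    [182.0, 185.0, 184.0],
    [183.0, 241.0, 186.0],
    [181.0, 184.0, 183.0],
]
hotspots = find_hotspots(frame, margin_c=30.0)
print(hotspots)
```

Using the median as the baseline keeps a single very hot cell from shifting the reference temperature, which a plain average would allow.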

Consider a leading steel mill that faced repeated unscheduled shutdowns due to refractory failures in its dry quenching unit. Initially, the plant selected bricks based on load softening temperature alone, neglecting thermal shock parameters. After implementing comprehensive thermal shock testing and switching to a high-alumina mullite brick chosen for its thermal shock resistance, the plant achieved:

Q1: How often should thermal shock testing be conducted for quality control?

A: Ideally, test every batch during production, and monitor critical units quarterly after installation.

Q2: Can offline lab data fully predict in-service refractory lifespan?

A: Not fully. Lab data provides a baseline for resistance, but field data, including IR imaging, is essential for a complete lifecycle assessment.

Q3: What is a cost-effective way to implement thermal shock diagnostics?

A: Start with IR imaging combined with a few targeted lab tests to understand your specific material behavior under operating conditions.
